Is it possible to detect if a text has been produced by some kind of AI engine? Can a text be plagiarism-free and unique, yet generated by AI? Can unique content generated by computer programs be identified as non-human even if it passes Copyscape? How do you check if a text has the originality of a human creator?

Most reliable software to find AI-written texts

OK, so you’re one of those readers who don’t have the time or energy to read 3000 words about which text analyzer is currently the best available, and you just want a quick answer?

Then just jump over to Originality AI and you’re good to go!

But if you are interested in the topic of AI-generated content and want to dig deeper and build your own conclusion, please feel free to read on.

Human text vs AI text

The popularity of artificial intelligence (AI) for content creation is growing as fast as new tools for automated content creation become available to the general public. This naturally leads to an increased demand for tools that can differentiate between human-written and AI-generated texts. To maintain content quality, service reliability, and reader interest, and to uphold ethical standards, it has become essential for publishers, companies, educators, and individuals to detect AI-generated content effectively. This has opened up a new market for something called originality tools. These programs are designed to identify and analyze texts potentially composed using AI tools like ChatGPT.

These originality tools employ sophisticated machine learning techniques in combination with natural language processing to differentiate between authentic human-created texts and content produced by AI. They analyze special patterns in texts, subtle cues, and linguistic nuances in order to make their statistical determinations. By employing a combination of algorithms and databases, these tools can offer a high degree of accuracy and confidence for detecting AI-derived text.

Among the abundance of available AI content detection tools, some outshine the others in certain areas, while others cater to specific industries or publisher needs. To name just a few examples, we have Originality.AI, Turnitin, and GPTZero, with new ones surfacing on more or less a daily basis lately, it seems. With this swelling list of options, it’s vital for bloggers and copywriters to carefully assess each tool’s unique features and capabilities to determine the best fit for their specific requirements and goals. That’s why I wrote this article, both for myself and for others interested in this hot topic.

Importance of AI Text Detection

Academic Integrity

In the academic world, it’s crucial to uphold integrity for accurate evaluation and maintaining trust in the educational system. The emergence of powerful AI-powered text-generating tools like ChatGPT has posed a serious challenge to ensure that students produce authentic and original work rather than just clicking a few buttons on a screen and handing in the result. AI detectors, such as Plagscan, can help educators identify AI-generated text and plagiarism within student submissions, offering efficient and reliable analysis to uphold academic integrity.

Journalists and Content Writers

For journalists and content writers, producing unique and reliable content is essential for establishing credibility and building trust with their audience. AI-generated text can potentially introduce misleading or low-quality content into the information ecosystem. By using AI text detectors, such as GPTZero, journalists and content creators can identify AI-written text and safeguard the originality of their work.

Chatbots and AI Communications

As AI chatbots become increasingly prevalent in various industries, the ability to detect AI-generated text is crucial for ensuring clear and effective communication. Sometimes, it may be essential for users to know whether they are communicating with an AI or a human, as AI may generate unintended or biased responses. AI text detection tools, like those mentioned on Toolonomy, can help users verify the source of communication, fostering trust and avoiding miscommunication.

Identifying AI-generated text and maintaining content originality across academic, journalistic, and AI communication domains play an essential role in ensuring the quality and credibility of written material.

Major AI Models and Their Outputs

GPT Models

GPT (Generative Pre-trained Transformer) models are among the most popular and advanced language models currently available. They use large neural networks trained on massive amounts of training data to generate coherent and context-aware text. GPT-3 is a notable example of this model type, and the newer GPT-4 is a significantly more powerful and comprehensive language generation tool. GPTZero, despite its name, is not a text generator but a detection tool aimed at the output of such models, focusing on verifying the authenticity of human-written content.

BERT Models

Bidirectional Encoder Representations from Transformers (BERT) models are another significant type of language model. They differ from GPT models in their approach to processing input and output. BERT models learn to predict a hidden word based on the context provided by the words surrounding it, on both sides. Think of it as a statistical engine that fills in the most likely word for a blank in a sentence. As a result, they offer improved language understanding capabilities and are used more for understanding text than for generating it. BERT models are often used for various natural language processing (NLP) tasks, such as sentiment analysis, question answering, and named entity recognition.

Jasper and Other Models

In addition to GPT and BERT models, other AI language models also play a role in text generation and AI-generated content detection. For example, Jasper is an end-to-end speech recognition model primarily used for speech-to-text transcription. It leverages neural networks to achieve high accuracy levels in understanding spoken language.

There are many other language models based on different AI techniques, such as Long Short-Term Memory (LSTM) networks and sequence-to-sequence (seq2seq) models. These models have various specific use cases and varying levels of performance in detecting and generating AI-generated content.

By utilizing AI-generated content detection tools like Originality.ai, which specifically targets content produced by models like ChatGPT, we can ensure the originality and authenticity of human-written text in an era filled with advanced language models.

Tools for AI Content Detection

Originality AI

Originality AI is an advanced AI content detection tool that checks for plagiarism while identifying AI-generated content. It scans your text to ensure it’s 100% unique and original. This AI detector is capable of recognizing content generated by popular AI writing tools built on ChatGPT. Its accuracy and speed make it a reliable choice for content creators and professionals. The price for the Originality tool is just 0.01 USD/100 words, which means a 2000-word article costs about 0.20 USD to scan and 20 USD covers roughly 200,000 words, which I find quite reasonable. But if you plan on checking every blog article you write, it will stack up and become a significant part of the price for writing a blog post. If your goal is to make money through display ads on your blog, it will likely only be affordable to use for potential high-traffic blog posts. It will take some time to earn back just the fees for plagiarism and AI-content assessments.
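To make the pricing arithmetic concrete, here is a minimal Python sketch. It takes the quoted rate of 0.01 USD per 100 words at face value and assumes billing in started blocks of 100 words; the function names are made up for illustration and are not part of any Originality AI interface.

```python
from decimal import Decimal

# Rate quoted in the article: 0.01 USD per 100 words (an assumption, check current pricing).
USD_PER_100_WORDS = Decimal("0.01")

def scan_cost_usd(words: int) -> Decimal:
    """Cost of scanning `words` words, assuming billing per started block of 100 words."""
    blocks = -(-words // 100)  # ceiling division
    return blocks * USD_PER_100_WORDS

def words_for_budget(budget_usd: Decimal) -> int:
    """How many words a given budget covers at the quoted rate."""
    return int(budget_usd / USD_PER_100_WORDS) * 100

print(scan_cost_usd(2000))              # 0.20
print(words_for_budget(Decimal("20")))  # 200000
```

So a single scan of one article is cheap, and the cost only adds up if you re-scan many drafts or check a large back catalog.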

Copyscape

Copyscape is probably the most famous plagiarism tool. It can be used to check AI-generated content for originality. It scans the web to detect any duplicate content and provides a detailed report of the results. The price is the same as for Originality after 200 words.

Turnitin

Turnitin is a widely recognized AI detection tool used primarily in the academic world. Its algorithm analyzes text content for similarities and matches with web pages, published articles, and other submitted work. By analyzing the text structure, vocabulary choices, and syntactic patterns, this AI detector helps spot machine-generated text and ensures the authenticity of the content. It’s designed to maintain the integrity of written work while safeguarding human creativity. Turnitin is effective not only in detecting plagiarism but also in identifying AI-generated content written by students or professionals. This well-known tool has been trusted for years, making it an excellent choice for many educators and organizations.

Plagiarism Checker X

This tool can be used to check for plagiarism in AI-generated content. It compares the content with a huge database of web pages, academic papers, and other sources to verify originality. The price is not per word but structured as a subscription plan for 39.95 USD per month. Consequently, you will have to be a high-volume customer to benefit from this solution.

Copyleaks

As a versatile AI content analyzer, Copyleaks focuses on providing fast and accurate results for various file formats. It uses NLP and advanced algorithms to scan texts, detecting any machine-generated content swiftly. This makes Copyleaks suitable for a wide range of users like students, teachers, and content creators who need a reliable way to ensure the originality of their work. It can also be used to check if the translator or content writer you hired on Fiverr really did the job on their own, or if they let their AI companion do the heavy lifting while charging you for a human-created text.

Grammarly

Grammarly is one of the most famous writing tools and is widely used by the public. This English writing assistant also offers a plagiarism checker, which can be used to check AI-generated content for originality. The content is checked against billions of different web pages to determine originality.

Quetext

This is a user-friendly plagiarism checker that can be used to check for AI-generated content and verify it for originality. Quetext examines the content against billions of sources to ensure originality just like the one above. Priced a little lower than its competitors, it looks good at first sight, but if you compare it against Originality it’s not up to par.

Sapling AI detector

The online program Sapling AI detector is a tool that can help determine whether a piece of content is written by a human or a machine. It works by using a machine learning system called a Transformer, which is similar to the one used to generate AI content in the first place. The tool provides an overall score and highlights portions of the text that appear to be AI-generated. However, it should not be used as the sole method to determine whether a text is AI-generated or truly written by a human, as false positives and false negatives may occur.

It was however very accurate in the few tests I did myself, like below for the “Perplexity” paragraph. When I went the extra mile and ran texts through an article spinner and then translated the text through several languages and then back to English in DeepL, it only marked some parts as AI-generated, while Originality basically screamed 90+ percent AI.

The detector for the entire text and the sentence-by-sentence detector employ different techniques, so they should be used together along with your best judgment to make an assessment. Some pros of the Sapling tool are that it’s fast, mostly accurate, and provides a great way to explore the tool’s features without paying for anything. However, as mentioned earlier, it should not be used as a standalone checker, since false positives and false negatives are known to happen.

It’s great for personal use and as a first line of defense to check if your suspicion is correct. If Sapling marks a few sentences as AI-generated, then you can escalate your investigation and use the amazing Originality AI verifier tool. Since the latter only has a paid option, you want to save the word balance in Originality for when it’s really needed.

The wide range of AI-detector programs allows you to choose the option best suited for your specific needs, whether you work in the academic or professional sector or intend to use the “autogenerated detector programs” for personal use.

Parameters and Indicators for Detection

In this section, we’ll explore the various parameters and indicators that can be employed to detect AI-generated content. These factors can provide a reliable method to distinguish between human and machine-generated text.

Perplexity

Perplexity is a statistical metric often used to measure the performance of language models, including AI-generated text. Lower perplexity values indicate that the model can predict the text more accurately. This metric can be used to gauge the effectiveness of an AI-generated content detection tool. Comparing the perplexity of a given text to a threshold or established baseline can help assess whether an AI or a human likely generated the text. Up to this point, this paragraph about perplexity is completely computer-generated AI text by Koala. It was detected as 99.7% fake by Sapling AI and 99% AI by Originality AI.
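For the curious, perplexity is usually computed as the exponential of the average negative log-probability a language model assigns to each token. The sketch below shows that calculation in Python. The "model" here is only a toy add-one smoothed word counter that I use as a stand-in, since real detectors rely on large neural language models, and the example sentences are invented.

```python
import math
from collections import Counter

def train_unigram(corpus_tokens):
    """Toy stand-in for a language model: add-one smoothed unigram probabilities."""
    counts = Counter(corpus_tokens)
    total = sum(counts.values())
    vocab_size = len(counts) + 1  # +1 reserves probability mass for unseen words

    def prob(token):
        return (counts.get(token, 0) + 1) / (total + vocab_size)

    return prob

def perplexity(tokens, prob):
    """exp of the average negative log-probability per token."""
    if not tokens:
        raise ValueError("need at least one token")
    avg_neg_log_p = -sum(math.log(prob(t)) for t in tokens) / len(tokens)
    return math.exp(avg_neg_log_p)

reference = "the cat sat on the mat and the dog sat on the rug".split()
model = train_unigram(reference)

predictable = "the cat sat on the mat".split()
surprising = "quantum marmalade negotiates velvet thunder".split()

print(perplexity(predictable, model))  # lower: the model has seen these words
print(perplexity(surprising, model))   # higher: every word is unseen
```

A detector built on this idea would compare the perplexity of a text against a baseline; where to put that threshold is a design choice and not something this sketch settles.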

Burstiness

Burstiness is another valuable indicator for identifying AI-generated content. It deals with the occurrence and distribution of specific words or phrases within a text. AI-generated text may exhibit irregular patterns in term frequency, resulting in bursts of specific words or phrases. By analyzing the deviation in word frequency distribution, a detection tool can potentially pinpoint AI-generated content.

Key elements:

- Word frequency distribution

- Deviation from typical human-generated text patterns

- Identification of unnatural bursts in specific terms
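One way to put a number on the "bursts" described above is to look at the gaps between successive occurrences of a term. The Python sketch below uses the burstiness coefficient B = (sigma - mu) / (sigma + mu) over those gaps, where mu is the mean gap and sigma its standard deviation. This is just one possible measure that I picked for illustration, not necessarily what any commercial detector computes, and the sample texts are made up.

```python
from statistics import mean, pstdev

def gaps(tokens, term):
    """Distances (in tokens) between successive occurrences of `term`."""
    positions = [i for i, tok in enumerate(tokens) if tok == term]
    return [b - a for a, b in zip(positions, positions[1:])]

def burstiness(tokens, term):
    """Burstiness coefficient B = (sigma - mu) / (sigma + mu) of the gaps.

    B is -1 for perfectly regular spacing and rises toward 1 as occurrences cluster.
    Returns None when the term occurs fewer than three times.
    """
    g = gaps(tokens, term)
    if len(g) < 2:
        return None
    mu, sigma = mean(g), pstdev(g)
    return (sigma - mu) / (sigma + mu)

regular = ("ai x x x " * 5).split()                        # "ai" every 4th token
clustered = ("ai ai ai ai " + "x " * 15 + "ai").split()    # a burst, then a long gap

print(burstiness(regular, "ai"))    # -1.0
print(burstiness(clustered, "ai"))  # positive value: occurrences are clustered
```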

Text Highlighting

Text highlighting can complement other detection parameters by visually indicating the segments of the text that are more likely to be AI-generated. This method can help users assess the reliability of a piece of content quickly. By highlighting unusual phrases, patterns, or inconsistencies within the text, the tool supports human evaluators in identifying potential AI-generated content.

Benefits of text highlighting:

- Visual representation of suspicious text segments

- Straightforward identification of patterns or inconsistencies

- Streamlines human-driven detection and validation
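The highlighting step itself is simple once you have a score per sentence. Here is a minimal Python sketch that wraps suspicious sentences in `[[ ]]` markers. The scores, the 0.8 threshold, and the marker style are all assumptions for illustration; a real tool would get the scores from its own classifier and render colors instead.

```python
def highlight(sentences, scores, threshold=0.8):
    """Wrap sentences whose score is at or above `threshold` in [[ ]] markers."""
    if len(sentences) != len(scores):
        raise ValueError("need one score per sentence")
    marked = [
        f"[[{sentence}]]" if score >= threshold else sentence
        for sentence, score in zip(sentences, scores)
    ]
    return " ".join(marked)

sentences = [
    "I wrote this on the train.",
    "Perplexity is a statistical metric.",
    "Then my coffee spilled.",
]
scores = [0.05, 0.97, 0.10]  # hypothetical per-sentence AI likelihoods

print(highlight(sentences, scores))
# I wrote this on the train. [[Perplexity is a statistical metric.]] Then my coffee spilled.
```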

It’s essential to consider these parameters and indicators when designing or using any originality tool to detect AI-generated content. Processes that incorporate perplexity, burstiness, and text highlighting can offer a more comprehensive approach to identifying and flagging potential AI-generated text. Combined, these metrics provide robust techniques for maintaining content authenticity and integrity.

Detection Tools for Educators and Institutions

Moodle Integration

Moodle is a popular learning management system which has embraced AI-generated content detection by integrating with established plagiarism detection tools, such as Turnitin. The AI writing detection capabilities within Moodle enable teachers to identify potentially AI-generated text in student submissions effortlessly. This allows institutions to maintain high academic standards while reducing the manual workload on their educators.

Blackboard and Canvas Add-ons

Similar to Moodle, Blackboard and Canvas also support the integration of detection tools designed to identify AI-generated content. For example, the Originality.AI extension, with a 94% detection rate on GPT-3 generated content, can be incorporated in both Blackboard and Canvas environments. This ensures a streamlined content evaluation process for teachers and admins, promoting academic integrity within institutions.

Implementing these AI-detection tools in leading learning management systems proves beneficial for all involved parties:

- Teachers: Ease of access and automation in AI-generated content detection saves time, allowing tutors to focus on student engagement and course delivery.

- Institutions: Ensuring academic integrity and promoting originality through the use of these tools enhances the reputation of any institution.

- Students: Awareness of sophisticated detection systems encourages original work and assists in developing their critical thinking and writing skills.

In conclusion, these detection tools and their seamless integration into Moodle, Blackboard, and Canvas make it easier for educators and institutions to uphold strict academic standards while adapting to the ever-evolving world of AI-generated content.

Accuracy and Reliability of AI Detectors

AI-generated content detectors have become increasingly important as AI language models like ChatGPT become more popular. These tools can help identify duplicate content and measure the uniqueness of the text. In this section, we will discuss their accuracy and reliability in terms of false positives, true positives, percentage-based results, and limitations.

False Positives and True Positives

False positives and true positives are essential factors in assessing the accuracy of AI detectors. False positives occur when the tool mistakenly flags human-written content as AI-generated, false negatives occur when AI-generated content slips through as original, and true positives are AI-generated texts correctly identified as such. Some AI detectors, such as Originality.AI, claim to have a 96% detection rate overall, with 99%+ on GPT-4 content, and 94.5% on paraphrased content. A high true positive and low false positive rate contribute to the overall reliability of these detectors.
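If you want to run your own small test of a detector, these rates are easy to compute from a labeled sample. In the Python sketch below, a true positive is an AI-generated text flagged as AI, and a false positive is a human-written text flagged as AI. The sample data is invented and the function name is my own.

```python
def detector_rates(is_ai, flagged):
    """Compare ground truth (`is_ai`) with detector verdicts (`flagged`)."""
    pairs = list(zip(is_ai, flagged))
    tp = sum(1 for truth, flag in pairs if truth and flag)          # AI, flagged
    fn = sum(1 for truth, flag in pairs if truth and not flag)      # AI, missed
    fp = sum(1 for truth, flag in pairs if not truth and flag)      # human, flagged
    tn = sum(1 for truth, flag in pairs if not truth and not flag)  # human, passed
    if tp + fn == 0 or fp + tn == 0:
        raise ValueError("need at least one AI and one human sample")
    return {
        "true_positive_rate": tp / (tp + fn),
        "false_positive_rate": fp / (fp + tn),
    }

# Hypothetical test set: four AI-generated texts followed by four human-written ones.
is_ai = [True, True, True, True, False, False, False, False]
flagged = [True, True, True, False, True, False, False, False]

print(detector_rates(is_ai, flagged))
# {'true_positive_rate': 0.75, 'false_positive_rate': 0.25}
```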

Percentage-Based Results

Many AI content detectors provide percentage-based results to indicate how likely the content was generated by an AI model. For example, a text analyzer may report a 95% confidence rate that a piece of content was generated by ChatGPT. These percentages help users make informed decisions about the authenticity of the content they are evaluating. However, it’s important to remember that the reported percentage values may vary between different tools, so cross-checking with multiple detectors is advisable for higher accuracy.
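Cross-checking can be as simple as collecting the scores side by side and looking at how far apart they are. The Python sketch below averages the scores and flags the text for human review when the detectors disagree. The detector names, the scores, and the 0.3 disagreement limit are placeholders I chose for illustration.

```python
from statistics import mean

def combine_scores(scores, disagreement=0.3):
    """Summarize AI-likelihood scores (0 to 1) from several detectors."""
    values = list(scores.values())
    spread = max(values) - min(values)
    return {
        "mean": mean(values),
        "spread": spread,
        "needs_human_review": spread > disagreement,
    }

# Hypothetical readings for the same text from three different tools.
readings = {"detector_a": 0.95, "detector_b": 0.90, "detector_c": 0.40}

print(combine_scores(readings))
```

Averaging hides disagreement, which is why the sketch also reports the spread: two tools saying 95% and one saying 40% is a different situation from three tools saying 75%.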

Limitations and Constraints

AI content detectors have come a long way but they are not bulletproof. Some limitations and constraints include:

- New AI models: As AI language models continue to develop and improve, it may be challenging for content detectors to keep up. The tools will need to be updated consistently to accurately detect content generated by the latest models.

- Paraphrased content: AI-generated content that has been paraphrased or edited by humans may be more challenging to detect. This is because human intervention can further mask the telltale signs of AI-generated text, reducing the effectiveness of detection tools.

In conclusion, while AI content surveillance programs have improved significantly in recent years, users should remain vigilant and consider a combination of detectors and other strategies to confidently assess the originality of content. Keeping these factors in mind can help users make the most of AI content spotters to maintain content quality and originality.

Future Developments in AI Detection Technology

As the capabilities of AI-generated content continue to advance, so must the efforts in developing more sophisticated AI detection technology. In this section, we will explore the future developments in AI detection technology, focusing on new parameters and indicators, improved language support and models, and collaborative detection tools.

New Parameters and Indicators

With the rapid evolution of AI-generated content, such as essays and articles, the need for new parameters and indicators in AI detection technology becomes more important. In the future, these technologies might take advantage of advanced Natural Language Processing (NLP) techniques and machine learning models to recognize subtle linguistic patterns associated with AI-generated text. This could potentially lead to more accurate detection rates and a better understanding of the nuances in both human and AI-generated content.

Improved Language Support and Models

As AI-generated content becomes available in a wider variety of languages, one challenge is ensuring AI detection technology is capable of detecting AI-generated texts in different languages. Future developments may involve incorporating language-specific models into AI detection tools, as well as training algorithms on a diverse set of text samples from various languages. This would improve the ability of these tools to identify AI-generated content across different languages and cater to a broader user base.

Collaborative Detection Tools

Collaborative detection tools could be the key to further advancements in AI detection technology. By pooling resources from different detection tools, tech companies can share insights and strengthen their methods for identifying AI-generated content. For instance, an AI classifier by OpenAI is currently in the works, and its public availability allows for feedback on its effectiveness. By enabling this type of collaboration, experts can refine their approaches, increase their knowledge base, and ultimately create more accurate AI detection tools.

Test winner – Originality AI

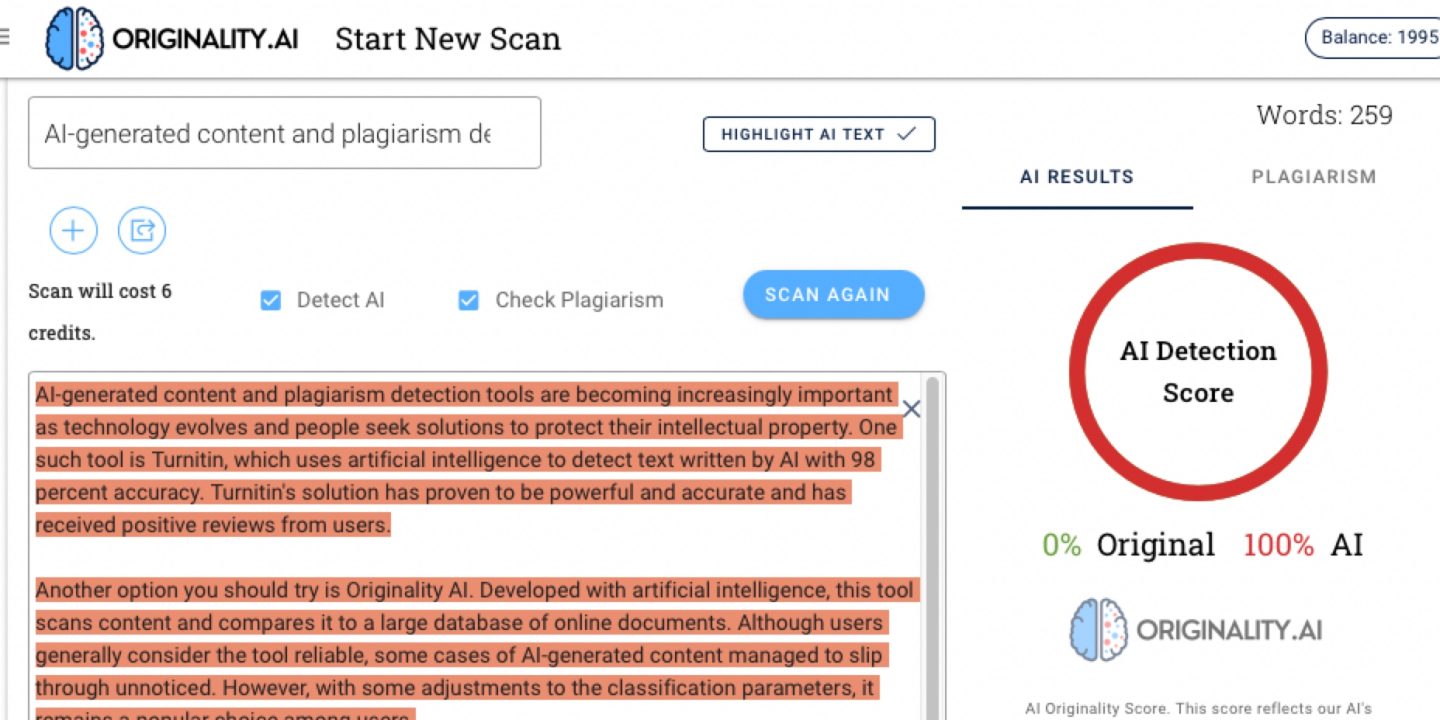

By comparing price and AI detection score, I selected Originality AI as the paid plagiarism checker of choice for my own blogs. The draft for this article was written completely by AI with the aid of Koala with GPT-4 enabled, an AI-powered blog writing tool that many bloggers consider to be the best in class right now. Just look at the Originality AI score for the draft conclusions in this article:

100%! Damn, that’s what I call state-of-the-art AI detection. There is still a need for human text editors… All this talk about AI taking all the jobs, but it just expanded the field of work called the “100% human-generated texts” niche. Now humans can suddenly prove that their work was “made by humans” and charge a reasonable price for text creation and creativity that they can actually live on. The demand for bulletproof 100% human copywriting just took off like a SpaceX rocket.

The major search engines are likely to include and judge blog articles based on how high they score on AI and auto-generated content tests. It’s now more important than ever to include a human in the loop for editing, rewriting, adding additional content, etc. to make an article “human”. AI has created a new field of work: “Humanizing texts”.

This is how Originality AI performs against its competitors

What about rewriting with AI tools?

Even after rewriting the draft conclusions with aitextconverter.com (see the quote below) the Originality AI software still showed an AI detection score of 94%. This is a tool specifically designed to “humanize text” to avoid AI detection like the one offered by Originality AI, and yet it failed against Originality AI. Not too surprisingly, to humanize a text you still need a human, at least for a few years more…

“AI-developed products and plagiarism detection tools are becoming increasingly important as technology advances and people look for solutions to protect their intellectual property. One such tool is Turnitin, which uses artificial intelligence to identify AI-encoded text with 98 percent accuracy.The Turnitin solution has proven powerful and accurate and has received positive feedback from the users.”…

Now maybe you think you could use DeepL to translate the conclusions from English to a small language like Swedish, then German, and then back to English:

“AI-developed plagiarism detection products and tools are becoming increasingly important as technology advances and people seek solutions to protect their intellectual property. One such tool is Turnitin, which uses artificial intelligence to detect AI-encoded text with 98 percent accuracy. The Turnitin solution has proven to be powerful and accurate and has received positive reviews from users.”

Conclusion

AI-content and plagiarism detection tools are increasingly important as technology advances and companies and people attempt to find solutions to protect their intellectual property. Turnitin is one of these tools which uses artificial intelligence to detect AI-written text with 98% confidence. Turnitin’s system has proven to be both powerful and accurate and received praise from its users.

Another option to try is my test winner Originality AI. It was developed using artificial intelligence and this tool scans content and compares it to a huge database of online documents. While users generally find the tool reliable, some instances of AI-generated content have been known to slip through unnoticed.

This was not the case when I tried it myself. No matter how hard I tried with different tools, I could not transform the computer-generated text into something that would pass as “made by human”. These solutions play a crucial role in enforcing intellectual property rights and helping schools and universities prevent fraud and cheating. They can also help search engines detect bloggers who spam out new articles without a human ever touching the texts.

I was stunned by how well Originality AI was able to detect AI content, even after spinning the text in online text rewriters and even translating the texts through several languages and back to English. Without human editing, the AI score in Originality was always between 90 and 100%.

If your goal is to use AI to produce a human-level blog post of 2000 words that will pass AI detectors like Originality, you will need to pay around 9 USD for Koala or 36 USD for Copy.ai, 20 USD for Originality, and maybe even for a paid article spinner.

This conclusion was originally written by Koala with GPT-4 enabled, then edited in my Mac programs, and after that it was checked and corrected in Grammarly. Then I added some more text, reordered some parts, realized there was more I wanted to add here and there, and re-checked the whole conclusion in Grammarly. The final result is the text you are reading now, which is heavily edited and rewritten by a human (me!). Originality now gives this conclusion text a score of 3% for probable AI-generated content.

To be fair, the first 3 paragraphs still rely on an AI-generated skeleton, but with too much human intervention to flag them as completely AI, and that conclusion is accurate. There is significant human editing involved throughout the conclusions, while the second half is written straight from my human brain. Originality actually flagged some parts of the beginning in yellow or orange as a little suspicious, but most other tools scored these conclusions as definitely 100% human-made all the way.

So the question is, how much human editing is needed to pass a test in Originality? With all this rewriting, restructuring and editing needed to lower the AI score enough, my conclusion is: It doesn’t save that much time in total compared to writing your own conclusions from scratch with 100% human text creation. This article took only a few minutes to generate in Koala, but literally hours to edit, rewrite, restructure, and expand.

If the goal is to create a text that will pass as >90% created by a human – you really need to let a human write it!

That’s how good the AI detection programs have become, or at least Originality AI is on that level, but more are soon to come and the race is on.

Try Originality.AI yourself here. Check some of your old content and articles, and that crap article you bought on Fiverr which you always thought was a bit too cheap.

Still curious about the final AI-detection score for the conclusions in this article?

97% Original, 3% AI, 0% Plagiarism

It even marked a sentence at the end in red as 100% AI (I wrote it straight from my mind), but I decided to rewrite the ending anyway, since apparently, I wrote it like a damn Terminator!

(maybe because I’m a bit tired and want to say “I’ll be back” or “Hasta la vista, baby”)

…or I actually found one of those rare cases when Originality gives a false AI-positive despite the text being completely written by a human.